Facebook unveiled new features for Facebook Groups today that empower administrators to allow anonymous posting and create subgroups. The announcement ignores the problems plaguing groups, such as “harmful topic communities” that pose a risk of violence and administrators who use evasion tactics to avoid content moderation.

On November 4, Facebook announced new tools for Facebook Groups at its Facebook Communities Summit and in an accompanying blog post. These features allow moderators to create a “customizable greeting message” for new members “to automatically receive” and allow members to customize post formats and give other members community awards. Notably, the announcement revealed additional tools for group administrators that will let them unlock many features previously available only to specific group types; Facebook is also letting them make subgroups, have community chats and recurring events, and use preset “feature sets,” including one with anonymous posting.

By giving administrators more power and access to more features, Facebook is ignoring the significant problems with its groups, particularly the abuse of existing features by “harmful topic communities” and administrators.

The company is reportedly aware that “harmful topic communities” -- such as those dedicated to the QAnon conspiracy theory, COVID-19 denial, “Stop the Steal,” and anti-vaccine efforts -- can lead to offline violence or harm. But Facebook has repeatedly failed to remove many groups that seemingly violate its policies related to COVID-19, vaccine, and election misinformation -- even when the violative groups are brought to its attention. In fact, Media Matters reported on over 1,000 groups dedicated to such misinformation that were active as of last week.

Facebook also already gives administrators a lot of responsibility, which some abuse. These administrators often use various evasion tactics to avoid content moderation and encourage members to do the same. These tactics include using code words, creating backup groups, creating networks of groups, changing to more innocuous group names, and putting more extreme content either in the comments or on alternative platforms like Telegram, MeWe, and Gab. Administrators acknowledge that Facebook has the biggest reach, so it is important for them to maintain a presence on the platform, even if more incendiary content has to be on other platforms with even less policy enforcement.

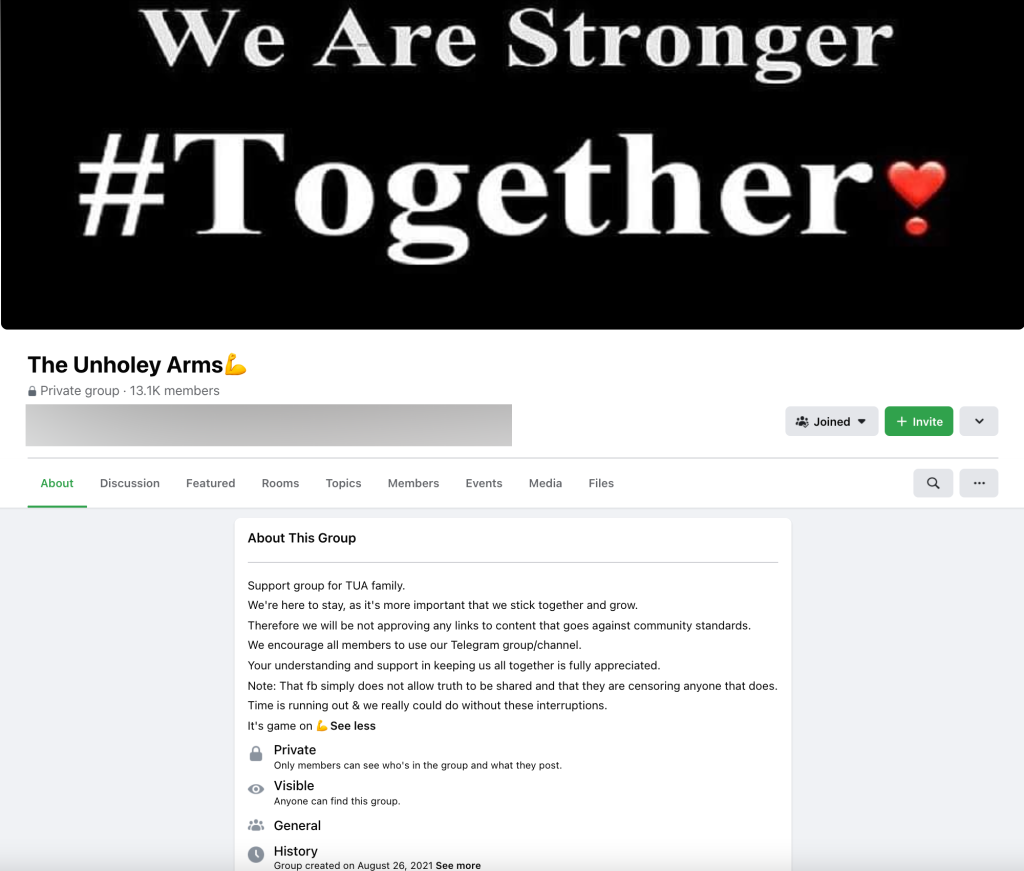

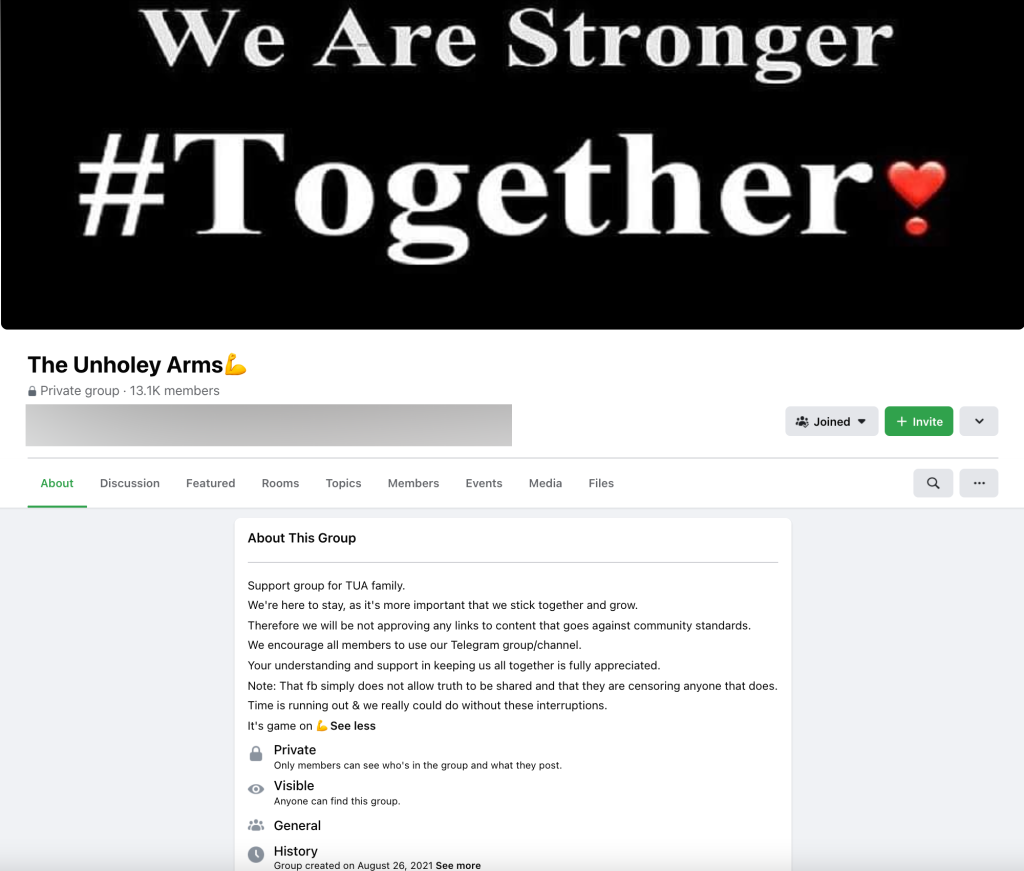

As just one example, The Unvaccinated Arms group has had at least four iterations, with Facebook removing and reinstating at least one group and eventually removing all restrictions on it. Although two versions of this group are now archived, there are two active private versions that encourage their more than 36,000 combined members to use a Telegram channel for any links so that the group can “stick together and grow” without raising suspicion on Facebook.

Administrators are also able to make groups “private” and even “hidden” -- which renders them invisible to anyone not already a member -- making them harder to monitor and moderate. (Tools that researchers use to analyze content on the site, such as CrowdTangle, include only publicly available posts from public groups, pages, and profiles.)

Giving groups and administrators more tools and features seems poised to only make things worse.