Melissa Joskow / Media Matters

Over the past month, Facebook has drawn international attention for its slow response to hate speech and fake news that helped fuel the genocide of the ethno-religious Rohingya minority in Myanmar; for the correlation found in Germany between Facebook usage and hate crimes against refugees; and for the fake news that has gone viral on the Facebook subsidiary WhatsApp and led to deadly attacks in India.

But, in the U.S., the criticisms of the social media giant that have dominated media coverage have dealt with baseless claims of censorship targeting conservatives. In a July study, Media Matters showed that the highest-performing political content actually comes from right-leaning pages. And a sample of pages we identified as regularly pushing right-wing memes had the highest engagement numbers overall, boasting more than twice the weekly interactions of nonpartisan political news pages.

In another recent study, Media Matters tracked narratives pushed by these right-wing meme pages and found that they often use false news and extremist rhetoric to push smears against immigrants and advocate for laws that negatively affect minorities. Facebook users who subscribe to these pages are being fed recycled -- and bigoted -- talking points, including that immigrants are taking government dollars from children and veterans; President Donald Trump’s inhumane immigration policies are part of an anti-Trump conspiracy; and racist voter ID laws are the solution to supposed mass voter fraud. The collective followership of these pages is in the hundreds of millions, and based on top comments to their right-wing meme posts, there appears to be a feedback loop in which commenters echo the language they see in the posts.

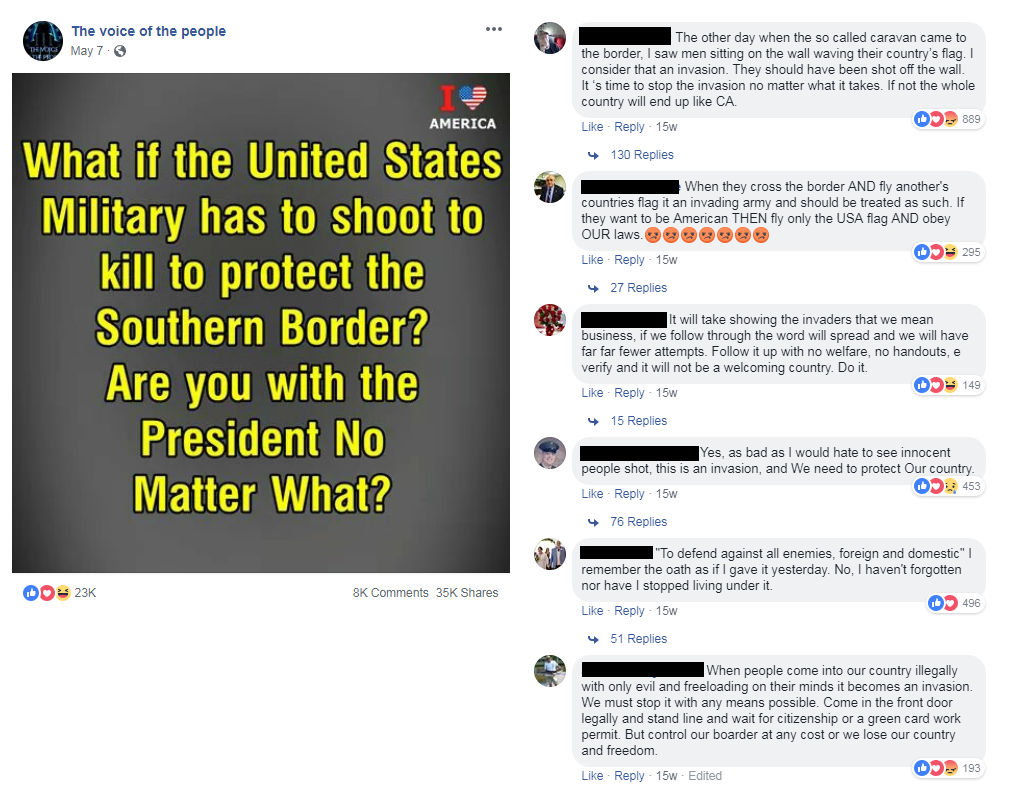

Every now and then, Facebook will take down a particularly detestable piece of right-wing content, like a meme that says Muslims shouldn’t be allowed in Congress, or one with a no symbol over the word “Islam.” But Facebook’s content policies fail to address the way individual posts contribute to a larger, violent narrative. In a study tackling content posted by right-wing meme pages, Media Matters found a subset of anti-immigrant memes that were especially vicious. These memes go viral about once a month on some of the most popular right-wing meme pages; they ask their followers if they support militarizing the border and shooting unarmed undocumented immigrants who are trying to cross. The top responses on these types of anti-immigrant posts supported shooting undocumented immigrants, and most referenced a cultural “invasion” and tied undocumented people to “welfare” programs. These commenters were borrowing language frequently used by right-wing meme pages and using that wording to justify the cold-blooded murder of undocumented immigrants out of fear they would use government benefits.

Facebook sometimes removes bad actors following backlash over a specific page or individual. But the tech platform does nothing to address extremist pages operating as coordinated networks, acting in tandem to amplify their reach. In our recent meme page study, Media Matters mapped out some networks of meme pages and groups run by fake news outlets and far-right clickbait sites. These sites depend on Facebook for online traffic, and they rely on viral meme content to boost their page visibility. Back in January, Facebook took down the official page of the racist fake news site Freedom Daily but left all the other pages in Freedom Daily’s network untouched. The people behind Freedom Daily (freedomdaily.com) seemingly made two clone sites, freedom-daily.com (which is no longer active) and mpolitical.com, which they continued linking to on the Facebook pages they used to promote Freedom Daily before. By April, the Facebook pages that were channeling traffic to freedomdaily.com by linking to it in 2017 were now linking to a new racist fake news site, rwnoffcial.com. What this example shows is that even when a page was banned from Facebook, its allied network of pages was able to direct traffic to an entirely new website in a matter of months.

It isn’t a coincidence that Facebook’s content moderation process is ineffective when it comes to moderating these extremist narratives. Most of Facebook’s responses to the spread of hate and violent fake news focus on individual posts encouraging violence, rather than on coordinated networks of bad actors driving long-term propaganda narratives. Even when Facebook takes down an individual post, the removal usually doesn’t affect the status or visibility of the page that posted it. Facebook doesn’t have a financial incentive to take down popular extremist networks pushing anti-immigrant, racist content. These pages aren’t a problem for Facebook; they’re a revenue stream. These pages and groups keep a large online community of President Donald Trump supporters engaged on the tech platform, where they consume and spread extremist content on their timelines (while clicking on ads and viewing content that Facebook gets paid to show). Simultaneously, Facebook is crucial to the business models of right-wing meme pages, as they push monetized content via racist clickbait, fake news sites, and online stores. Facebook isn’t just giving the far-right a soapbox to reach conservative communities; it’s also directly profiting from hate speech and extremist conspiracies.