High-profile users like Kylie Jenner and Kim Kardashian have criticized changes to Instagram, but they fail to recognize that the platform's issues run much deeper than the new interface. Its parent company repeatedly prioritizes revenue and growth over the safety of its users, recommends harmful content, and refuses to provide the public with data, policy, or algorithm transparency, all problems that the new changes will exacerbate.

This week, Instagram rolled out a new user interface for some of its users, with a full-screen feed, more Reels, and suggested posts. These changes — some of which will also be implemented on the Facebook app — are part of Meta’s overhaul to compete with TikTok. (Meta is Instagram’s parent company.)

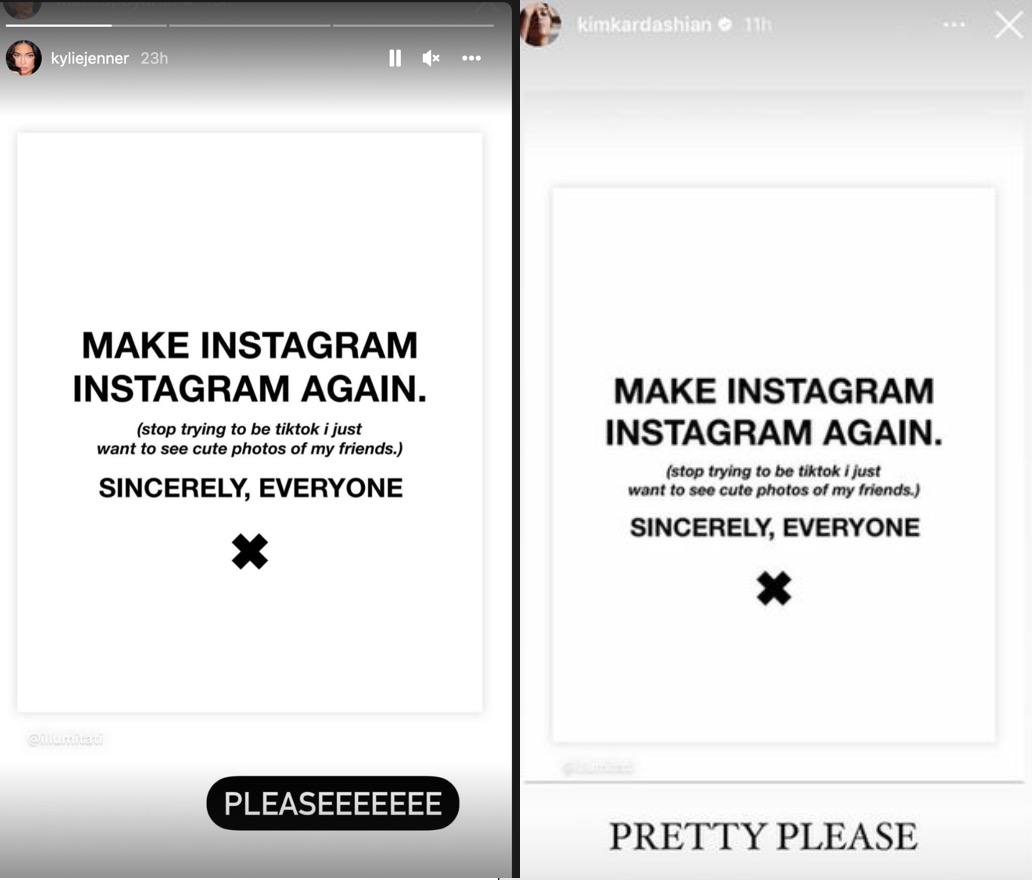

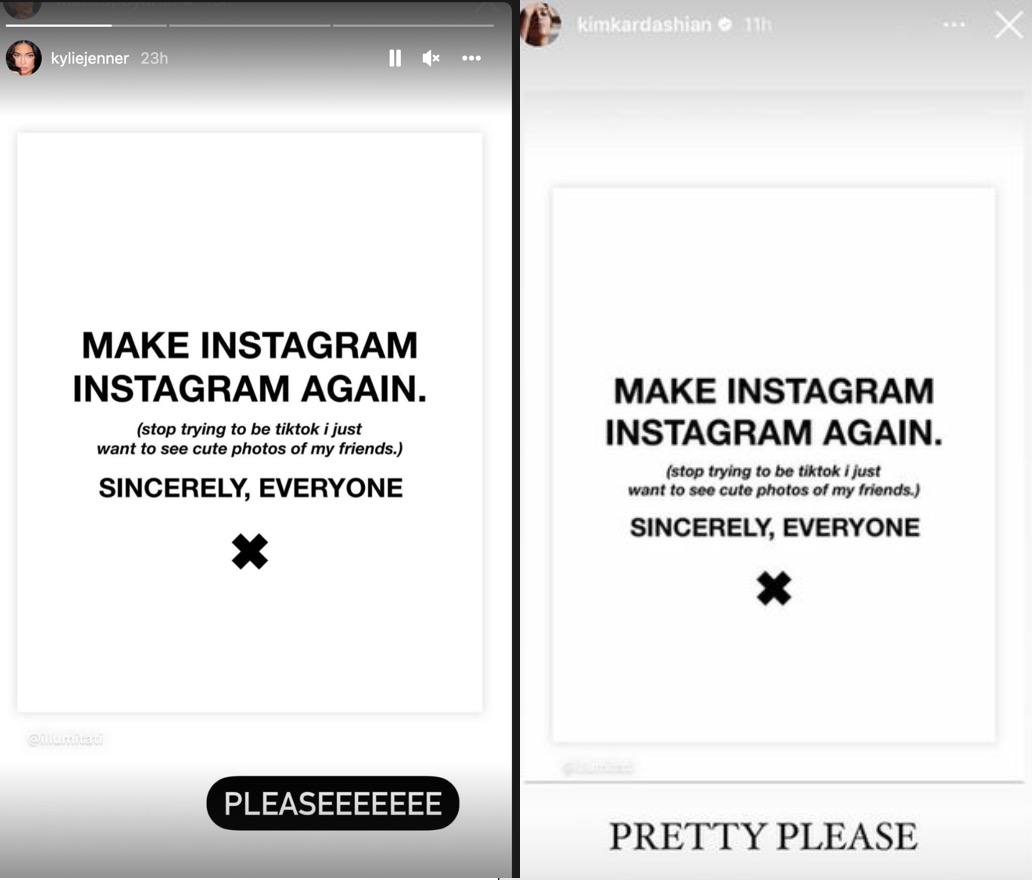

Instagram users have criticized these changes, particularly the transition from photos to videos, with meme creators protesting at Meta’s New York City HQ and high-profile celebrities Jenner and Kardashian posting criticism on Instagram Stories.

Instagram head Adam Mosseri responded quickly to the criticism, saying, “It’s not yet good,” and adding that Instagram will “have to get it to a good place if we’re going to ship it to the rest of the Instagram Community.” Mosseri also committed to keeping photos on the platform but admitted that “more and more of Instagram is going to become video.”

The quick response to Jenner and Kardashian’s criticism, along with Snapchat’s stock falling in 2018 after Jenner criticized the platform, shows the influence that they have on social media platforms.

While Instagram users, including Jenner and Kardashian, are mostly discussing the new video-focused feed (which is being tested on a few users), other aspects of the changes are even more problematic, as users are forced to engage with videos on the feed and posts are suggested to users based on a recommendation algorithm.

Given the influence Jenner and Kardashian wield on the platform, here are some of the other problems that plague Instagram, are being worsened by its new features, and should also be on their radar:

Meta prioritizes revenue and growth over the safety of its users

Meta’s advertising system leverages its platforms’ vast trove of data to create personalized ads targeting each user. But as the company developed its ad model, it ultimately prioritized revenue and growth over the safety of its users: users engaged with sensational content, and that engagement turned into profit.

The test feed on Instagram reportedly replaces the classic infinite scroll with post-by-post scrolling, forcing users to engage with each image or video completely before moving on. Among these posts are advertisements, which reportedly appear every fourth post. Instead of letting users quickly scroll past, each ad takes up the full display in the test feed and forces the user to engage with it, as they must touch the screen to dismiss it.

Meta has a history of recommending harmful content

Facebook and Instagram’s recommendation systems have been known to quickly lead users to extreme content and misinformation, even as Meta claims that its Recommendations Guidelines are “designed to maintain a higher standard” than its Community Guidelines. In fact, Facebook’s own internal research demonstrated how quickly its algorithms recommended QAnon-related groups and other extreme groups to a new account:

> In summer 2019, a new Facebook user named Carol Smith signed up for the platform, describing herself as a politically conservative mother from Wilmington, North Carolina. Smith’s account indicated an interest in politics, parenting and Christianity and followed a few of her favorite brands, including Fox News and then-President Donald Trump.
>
> Though Smith had never expressed interest in conspiracy theories, in just two days Facebook was recommending she join groups dedicated to QAnon, a sprawling and baseless conspiracy theory and movement that claimed Trump was secretly saving the world from a cabal of pedophiles and Satanists.
>
> Smith didn’t follow the recommended QAnon groups, but whatever algorithm Facebook was using to determine how she should engage with the platform pushed ahead just the same. Within one week, Smith’s feed was full of groups and pages that had violated Facebook’s own rules, including those against hate speech and disinformation.

On Instagram, the “similar account suggestions” algorithm has recommended accounts that share misinformation, particularly anti-vaccine accounts. Instagram also has an Explore page, which it describes as “a discovery surface where Instagram sources content from across the platform based on a given person’s interest.” As the platform further expands into algorithm-based recommendations, it should consider the issues that it has already had with the Explore page, whose algorithm has promoted dangerous weight loss content pushing restrictive diets and potentially dangerous supplements.

In addition to the Explore page, Instagram is testing suggested posts in the feed. In the test feed, the suggested posts reportedly carry no indicator except for a “follow” button next to the account name, rather than a “Suggested for you” label at the top of the post. Thus, a user will see the content first and only then potentially notice the small indicator that the post is not from an account they follow.

In its latest earnings call, Meta revealed that AI-driven recommendations would be rapidly increasing this year.

The shift to recommended content from accounts the user doesn’t follow comes after Facebook had prioritized “meaningful posts from their friends and family in News Feed” because the platform “was built to bring people closer together and build relationships” and because it needed to get a handle on misinformation proliferating on the platform.

Instagram's new changes highlight Meta’s lack of transparency

Meta’s policies are often unclear and narrowly interpreted. On Instagram, they are so unclear that the platform has had to publicly clarify what they mean. A BBC review showed that Instagram and 14 other social media sites “had policies that were written at a university reading level,” even though children as young as 13 were allowed to use the platform. Facebook has also been criticized for its vague policies, with its Oversight Board — whose members' salaries are funded by the platform — calling the company out for its lack of transparency.

Reports have recently revealed that Meta has once again prioritized its public image over data transparency, as it seeks to limit and eventually phase out CrowdTangle, a data analytics tool that allows users to search public social media posts, even as it often relies on researchers to detect violations.

Beyond the lack of policy and data transparency, Meta’s algorithms, particularly its ranking and recommendation algorithms, are a black box to the public, and even lawmakers have struggled to get details on them. Instagram has remained vague about the details of the algorithms used for content in the test feed.