TikTok’s “For You” page (FYP) recommendation algorithm appears to be leading users down far-right rabbit holes. By analyzing and coding over 400 recommended videos after interacting solely with transphobic content, Media Matters traced how TikTok’s recommendation algorithm quickly began populating our research account’s FYP with hateful and far-right content.

TikTok has long been scrutinized for its dangerous algorithm, viral misinformation, and hateful video recommendations, and this new research demonstrates how quickly the company’s recommendation algorithm can fill a user’s FYP with radicalizing content.

Transphobia is deeply intertwined with other kinds of far-right extremism, and TikTok’s algorithm only reinforces this connection. Our research suggests that transphobia can be a gateway prejudice, leading to further far-right radicalization.

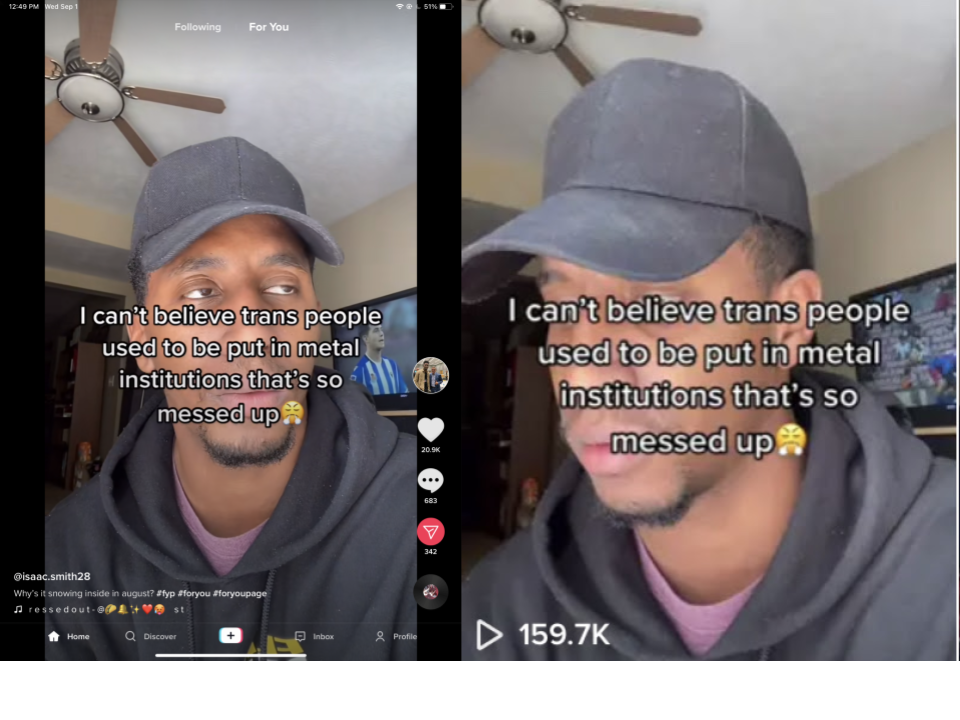

To assess this phenomenon, Media Matters created a new TikTok account and engaged only with content we identified as transphobic. This included content from accounts that had posted multiple videos that degrade trans people, insist that there are “only two genders,” or mock the trans experience. We coded approximately the first 450 videos fed to our FYP. Even though we solely interacted with transphobic content, we found that our FYP was increasingly populated with videos promoting various far-right views and talking points.

That content did include additional transphobic videos, even though such content violates TikTok’s “hateful behavior” community guidelines, which state that the platform does not permit “content that attacks, threatens, incites violence against, or otherwise dehumanizes an individual or group on the basis of” attributes including gender and gender identity.

Key Findings

- After we interacted with anti-trans content, TikTok’s recommendation algorithm populated our FYP with more transphobic and homophobic videos, as well as other far-right, hateful, and violent content.

- Exclusive interaction with anti-trans content spurred TikTok to recommend misogynistic content, racist and white supremacist content, anti-vaccine videos, antisemitic content, ableist narratives, conspiracy theories, hate symbols, and videos including general calls to violence.

- Of the 360 total recommended videos included in our analysis, 103 contained anti-trans and/or homophobic narratives, 42 were misogynistic, 29 contained racist narratives or white supremacist messaging, and 14 endorsed violence.